Before and After Superintelligence Part I


Given access to artificial general intelligence (AGI), what life changes should I make? Assuming the arrival of artificial superintelligence (ASI), how can I best react before it is released, and in the days and weeks that follow?

Before superintelligence (during AGI), I want to focus on four things:

- Do mentally effortful things

- Build the right networks

- Place long AGI trades

- Develop Game Theory intuition + build game theory simulations with agents.

After superintelligence, I should:

- Form a small, conscientious team to be an aggressive player, politically and economically, in the chaotic free-for-all that follows.

1. Definitions and Assumptions

Here, by AGI I mean an AI model that is as smart as a high-performing professional at a given task. I believe this has already been reached. This essentially gives each of us access to an army of knowledge workers.

I use superintelligence to mean AI smarter than the smartest human in a field (Terence Tao in math, for example). This stage also implies a potential world in which models recursively self-improve. The advent of superintelligence would render all purely human intellectual labour meaningless.

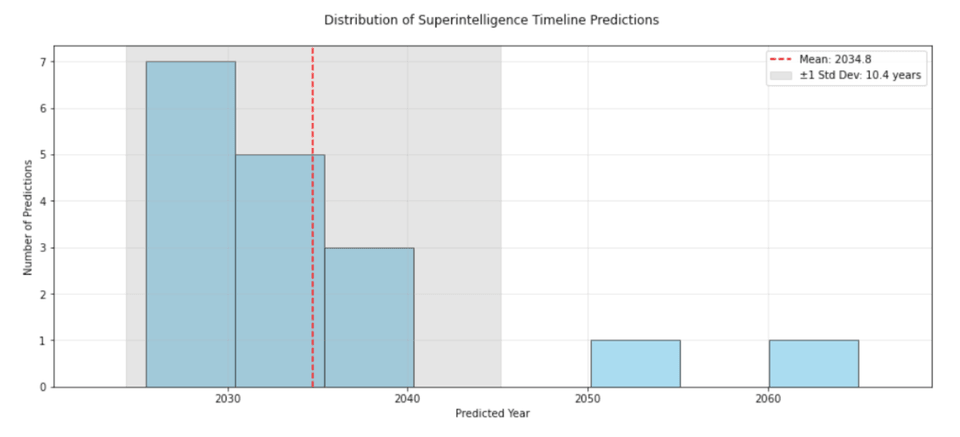

Note: I do not find timelines for when superintelligence will be achieved very useful, because they are subject to radical (Knightian) uncertainty. People do not forecast using the same definitions, and the variance is incredibly high, as the accompanying plot of forecasts from those close to the metal demonstrates.

People draw on different reasons for why AI will keep getting better:

- The reason I find most convincing is that teaching models to reason by showing them reasoning traces from human domain experts makes them inherit the deep flaws in how we understand the world. 5000 years ago, we would've trained these models on animism, 3000 years ago on theism, 300 years ago on Newtonian mechanics, and 50 years ago on quantum mechanics.[1]

- More efficient mechanisms of thought surely exist, using non-human languages that may for example utilize symbolic, distributed, continuous, or differentiable computations. A self-learning system can in principle discover or improve such approaches by learning how to think from experience.

2. Before Superintelligence

a) Do mentally effortful things

I plan on fighting the urge to skip mental effort, both deliberately and through setting up a learning system that doesn't do the meaningful work for me.

While AGI has made it easier to complete tasks and gain knowledge, it has made it harder to expend mental effort. This matters because the upper bound of agency is a function of mental stamina/grit and creativity/imagination. It also matters because we need to be our sharpest selves on the unknown but fateful day superintelligence emerges (more on this later).

There are two problems with AGI at the individual level of learning and growth. First, it stunts our mental stamina, so our ability to ask the right question and our general creativity also diminish. Even asking AI to brainstorm, code, or automatically text back atrophies our cognitive abilities. Just as fighter pilots are supported by advanced systems and personnel so that they can conserve energy for rigorous personal training, AGI should function as a conscious, visible and powerful tool that enhances mental growth. Second, AGI leads to the overproduction but underdigestion of information, making it easy to forget what the tree of knowledge looks like. I catch myself asking ChatGPT a question that I can formulate but barely understand, and falsely feeling satisfied after reading the response. Caching 'atomic' knowledge, remembering what is foundational, is critical.

Collectively, AGI will foster groupthink and a willingness to unquestioningly accept the views of technocratic and social authority. As models continue to proclaim plausible statements faster than they can be dissected, people will disagree less. Also, as AI leads to vivid, realistic simulations (briefly explained in section d), people will view the world less as a distribution of possible outcomes and more as predetermined. The change I want to make is to challenge authoritative views more often.

Specifically, the goal is to become a self-aware cyborg: to have wafer-thin but unambiguous boundaries between my own cognition and what AI is doing in my learning and thinking processes, benefitting from the speed and encyclopaedic breadth of AGI without sacrificing real thinking.

b) Grow Emotional and Social Skills

I plan on continuing to spend time challenging and exploring myself through social adventure.

I believe human relationships and authenticity will become increasingly precious. Having the right network becomes increasingly important. This is because, as Ishita said, knowing the right people makes achieving your goals easier.

The constituent skills (effective communication, empathy, humour, spontaneity) are nurtured by exploring and challenging your own comfort level: traveling with friends, joining a dance group, having difficult conversations. The list goes on.

c) Place long AGI trades

I plan on placing trades on 'second derivative' effects of AGI, including growing wealth inequality, a heightened desire to spend/borrow in the short term, fixed scarcity, and robotics.

The below is not financial advice and does not reflect the view of my employers.

Just as the explosion of the internet was incredibly profitable for well-positioned speculators, AGI presents a large opportunity. However, the major issue with AGI trades is that you are entering markets with extremely poorly informed speculators, i.e. the Dot Com bubble will happen again with AI. The crowded trades tend to be the ones with the most obvious exposure to AI 'factor risk', such as chips (NVIDIA) or foundation model/application companies (Alphabet, Meta).

I propose four trade ideas that I believe are 'second derivative', or are less likely to be crowded (as of February 2025).

- AGI will increase wealth inequality. More work gets done by fewer people/organisations. A trade could look like i) long luxury, short retail, ii) long mega-cap equity, short small-cap equity, or iii) long equity, short bonds.

- People will take out huge loans to pay for model inference and training at a premium in the winner-takes-all dynamic of 'the lightcone'. This will raise borrowing rates. Example trades: i) short Treasuries, ii) long floating-rate notes.

- Trading scarcity. Few things will continue to be scarce in the world of ASI. Investors will flock to 'permanent' or 'rare' forms of value. Trades: i) long real estate, ii) long gold.

- Downstream applications of AGI haven't had as much focus from retail investors yet. Trades: i) long robotics, ii) short Indian outsourcing workshops.

  - The major part of this is long robotics.

  - Wearables companies will shoot up in valuation as robotics companies unlock the ability to move in complex environments and a data moat forms. A startup that collects realistic human movement data while people perform tasks like factory work will be immensely valuable.

- Generally, it's harder for implied vol to be underpriced in a world of unprecedented change (update October 6th: I think there's a good chance it's an AI bubble and short term vol is overpriced. Long term vol is underpriced.)
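To make the "long X, short Y" structure in the list above concrete, here is a minimal sketch of the arithmetic behind a dollar-neutral pair. The function name `pair_pnl`, the notional, and every return figure are hypothetical and purely illustrative; they are not forecasts and ignore financing, fees, and margin.

```python
def pair_pnl(notional: float, long_return: float, short_return: float) -> float:
    """P&L of a dollar-neutral pair: long `notional` of one asset, short the same amount of another."""
    # The short leg gains when its asset falls, so only the return spread matters.
    return notional * (long_return - short_return)


# Hypothetical scenario returns (long leg, short leg). Illustrative numbers only.
scenarios = {
    "both rise, long leg leads": (0.20, 0.05),
    "both fall, long leg falls less": (-0.10, -0.25),
    "thesis wrong, short leg leads": (0.05, 0.20),
}

for name, (long_r, short_r) in scenarios.items():
    print(f"{name}: {pair_pnl(100_000, long_r, short_r):+,.0f}")
```

The point of the pair is that it is a bet on relative performance: it can make money in a falling market as long as the long leg falls less, and it loses whenever the short leg outperforms, whatever the market does.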

d) Develop Game Theory Intuition + Build AI Game Theory Simulation

I plan on developing strong game theory intuition to better predict the world as AGI experiences step changes, and on finding further edge by building game theory simulations with AI agents.

Because timelines are so uncertain, dynamic strategies, not static models, matter when reacting to AI.

For example, consider time series data. We only ever get one realized version of history to fit, a single thread. It's a very fragile thing to fit trends on, and it requires intuition and heart to find patterns others can't. When I'm making decisions, I will have to rely on this a lot more. This naturally motivates game theory. I plan on developing intuition around it by working through thought experiments and going over canonical results from game theory.
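A small sketch of why a single thread is fragile to fit: below, many driftless random walks are simulated (so the true trend is zero by construction) and a straight line is fitted to each one. The setup, the helper names `ols_slope` and `random_walk`, and the parameters (250 steps, 1000 paths, unit Gaussian shocks) are my own illustrative assumptions, not a model of any real market.

```python
import random
import statistics


def ols_slope(ys):
    """Least-squares slope of ys regressed on the time index 0..n-1."""
    n = len(ys)
    x_mean = (n - 1) / 2
    y_mean = sum(ys) / n
    num = sum((x - x_mean) * (y - y_mean) for x, y in enumerate(ys))
    den = sum((x - x_mean) ** 2 for x in range(n))
    return num / den


def random_walk(n_steps, rng):
    """Driftless random walk: cumulative sum of zero-mean Gaussian shocks."""
    level, path = 0.0, []
    for _ in range(n_steps):
        level += rng.gauss(0.0, 1.0)
        path.append(level)
    return path


rng = random.Random(0)
# Each path plays the role of one possible "history"; in reality we only see one of them.
slopes = [ols_slope(random_walk(250, rng)) for _ in range(1000)]

print("mean fitted slope:", round(statistics.mean(slopes), 4))
print("std of fitted slopes:", round(statistics.stdev(slopes), 4))
print("steepest up / down:", round(max(slopes), 4), round(min(slopes), 4))
```

Across paths the fitted slopes scatter around zero, but any one path taken alone can show a convincing upward or downward "trend". With only one realized history, there is no way to average that noise away.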

I also see an unexplored opportunity in simulating complex game-theoretic scenarios using AI agents. Much of academic game theory relies on stylized assumptions rather than the complexities of real-world scenarios, which leaves a narrow but critical gap. This gap determines which Nash equilibria (NE) are likelier than others, or even when behaviour deviates unexpectedly from the expected NE dynamics. The gap often persists because of the limitations of simplified mathematical assumptions and the computational cost of explicit dynamic programming (DP) approaches.
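As a toy illustration of the "which equilibrium is likelier" question, the sketch below takes a stag hunt, a game with two pure Nash equilibria, enumerates them, and then lets two simple agents learn by fictitious play from random starting beliefs to see how often each equilibrium is reached. The payoff numbers, the choice of fictitious play as the learning rule, and all function names are assumptions made for illustration; real AI-agent simulations would be far richer than this.

```python
import random
from collections import Counter
from itertools import product

STAG, HARE = 0, 1
# PAYOFF[my_action][their_action]; the game is symmetric.
# Illustrative stag-hunt payoffs (an assumption, not taken from any dataset).
PAYOFF = [[4, 0],
          [3, 3]]


def best_response(belief_stag: float) -> int:
    """Best action given the believed probability that the opponent plays STAG."""
    ev_stag = belief_stag * PAYOFF[STAG][STAG] + (1 - belief_stag) * PAYOFF[STAG][HARE]
    ev_hare = belief_stag * PAYOFF[HARE][STAG] + (1 - belief_stag) * PAYOFF[HARE][HARE]
    return STAG if ev_stag >= ev_hare else HARE


def pure_nash_equilibria():
    """Action pairs where neither player gains by deviating unilaterally."""
    equilibria = []
    for a, b in product((STAG, HARE), repeat=2):
        a_ok = all(PAYOFF[a][b] >= PAYOFF[alt][b] for alt in (STAG, HARE))
        b_ok = all(PAYOFF[b][a] >= PAYOFF[alt][a] for alt in (STAG, HARE))
        if a_ok and b_ok:
            equilibria.append((a, b))
    return equilibria


def fictitious_play(rng: random.Random, rounds: int = 200):
    """Two agents repeatedly best-respond to the observed frequency of each other's actions."""
    # counts[i] = [STAG observations, HARE observations] for agent i, seeded with a random prior.
    counts = [[rng.random() + 0.01, rng.random() + 0.01] for _ in range(2)]
    actions = (HARE, HARE)
    for _ in range(rounds):
        beliefs = [c[STAG] / (c[STAG] + c[HARE]) for c in counts]
        actions = (best_response(beliefs[0]), best_response(beliefs[1]))
        counts[0][actions[1]] += 1  # agent 0 observes agent 1's action
        counts[1][actions[0]] += 1  # agent 1 observes agent 0's action
    return actions  # joint action played in the final round


def equilibrium_frequencies(trials: int = 2000, seed: int = 0) -> Counter:
    """Tally the final joint action over many random starting beliefs."""
    rng = random.Random(seed)
    return Counter(fictitious_play(rng) for _ in range(trials))


print("pure NE:", pure_nash_equilibria())
print("final joint actions:", equilibrium_frequencies())
```

Both (stag, stag) and (hare, hare) are equilibria on paper, but with these payoffs stag is only a best response when an agent believes the other will play it at least 75% of the time. How often each outcome appears therefore depends on the starting beliefs and the learning rule, which is exactly the kind of detail that stylized analysis leaves out.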

Firms like Altera.AL have demonstrated the potential of AI-driven simulations, such as discovering emergent social behavior through interactions between Minecraft AI agents. However, building one would require an answer to questions like: how can these simulations be meaningfully grounded in reality, similar to how physics simulations derive significance? How do we quantify the error in a way that aligns with real-world distributions of events?

3. After superintelligence

This discussion of post-superintelligence assumes aligned AI: AI that does not exhibit behaviours like strategic self-preservation, where it might conceal its full capabilities to avoid shutdown. The effort to ensure this happens, alignment research, is arguably one of the most critical kinds of work, because without alignment, existential risks render this discussion moot.

I lack the imagination to spell out what a world with superintelligence would look like. At a high level, it will be a chaotic free-for-all where power is up for grabs, and events unfold at an extreme pace without a stable equilibrium. Critical questions are: Which resources should be secured first? What foundational moves take precedence? How do different players interact strategically? The best strategy is to form a coalition of highly capable individuals and seize as much control as possible when transformative AI emerges.

Likely outcomes include the fragmentation of nation-states into smaller, powerful political factions, increased opportunities for revolution (political and economic), and intensified cybersecurity threats. A prudent strategy involves securing substantial financial resources (large credit lines) in the coming years, justified on these grounds, so that they can be deployed on compute and talent if superintelligence arrives.


Footnotes

1. Credit to Vedant Khanna